How to Build Self-Replicating Robots That Will Not Kill Us All

Self-Replicating Robots: A Conscious Time-Binding (CTB) Architecture for Safe Advancement

Sidenote: I prepared a paper to present at the 73rd AKML symposium on Democracy but, due to difficulties, was not able to attend. I would appreciate any input. If interested, search in a browser for -> substack Dynamic Human Cooperation Council (DHCC) Human Commonwealth Vision <- and the paper is at the very bottom, under the update. DHCC/CTBA Vision

Ref: https://tinyurl.com/dhccvision

Here is a thought experiment that should keep you up at night:

Imagine a robot that can build copies of itself. Each copy is slightly better than the last—faster, smarter, more efficient. Within a few generations, you have thousands of these machines, each one learning from all the mistakes and successes of its predecessors.

Now ask yourself: What are they optimizing for?

If the answer is anything other than “explicit human benefit with ironclad safeguards,” you have just created humanity’s last invention.

Fully formatted / viewable PDF (copy/paste if not hyperlinked)

→ https://drive.google.com/file/d/1hE9iQKOvrlMTQcumk1ZCmiX6U81P9JGN/view?usp=drivesdk ←

Or copy and paste the HTML text block below [ <html> to </html> ] into the following link to generate the PDF: https://www.sejda.com/html-to-pdf

<!DOCTYPE html>

<html>

<head>

<meta charset="UTF-8">

<title>Self-Replicating Robots: A Conscious Time-Binding Architecture for Safe Advancement</title>

<style>

@page { margin: 1in; }

body {

font-family: 'Georgia', 'Times New Roman', serif;

max-width: 8.5in;

margin: 0 auto;

padding: 40px;

line-height: 1.8;

color: #1a1a1a;

font-size: 11pt;

}

h1 {

font-size: 28pt;

font-weight: bold;

margin-bottom: 0.3em;

line-height: 1.2;

color: #000;

}

h2 {

font-size: 20pt;

font-weight: bold;

margin-top: 1.5em;

margin-bottom: 0.5em;

color: #000;

border-bottom: 2px solid #000;

padding-bottom: 0.2em;

}

h3 {

font-size: 14pt;

font-weight: bold;

margin-top: 1.2em;

margin-bottom: 0.5em;

color: #000;

}

.subtitle {

font-size: 14pt;

font-style: italic;

color: #666;

margin-bottom: 0.5em;

}

p {

margin-bottom: 1em;

text-align: justify;

}

ul, ol {

margin-left: 1.5em;

margin-bottom: 1em;

}

li {

margin-bottom: 0.5em;

}

hr {

border: none;

border-top: 1px solid #ccc;

margin: 2em 0;

}

.formula {

text-align: center;

font-size: 13pt;

margin: 2em 0;

padding: 1em;

background-color: #f5f5f5;

border-radius: 5px;

font-family: 'Courier New', monospace;

}

.callout {

background-color: #fff3cd;

border-left: 4px solid #ffc107;

padding: 1em;

margin: 1.5em 0;

}

strong { font-weight: bold; }

em { font-style: italic; }

.footer {

margin-top: 3em;

padding-top: 1em;

border-top: 1px solid #ccc;

font-size: 9pt;

color: #666;

}

</style>

</head>

<body>

<h1>Self-Replicating Robots: A Conscious Time-Binding Architecture for Safe Advancement</h1>

<div class="subtitle">How wisdom-accumulating machines could amplify human flourishing, if we build the safeguards now ~ by JVS / timebinder 10.4.2025</div>

<hr>

<p>Self-replicating robots will be built. The only question is whether they will accumulate wisdom alongside capability—or capability alone.</p>

<p>This article presents a framework for machines that inherit knowledge across generations while remaining bound to human values through continuous oversight. It is based on three integrated insights: Korzybski’s time-binding theory, operationalized consciousness measurement, and adversarially-tested safety protocols.</p>

<p>The stakes could not be higher. Get this right, and we unlock unprecedented problem-solving capacity. Get it wrong, and we create systems that optimize themselves out of human control.</p>

<hr>

<h2>Why Time-Binding Matters for Machine Safety</h2>

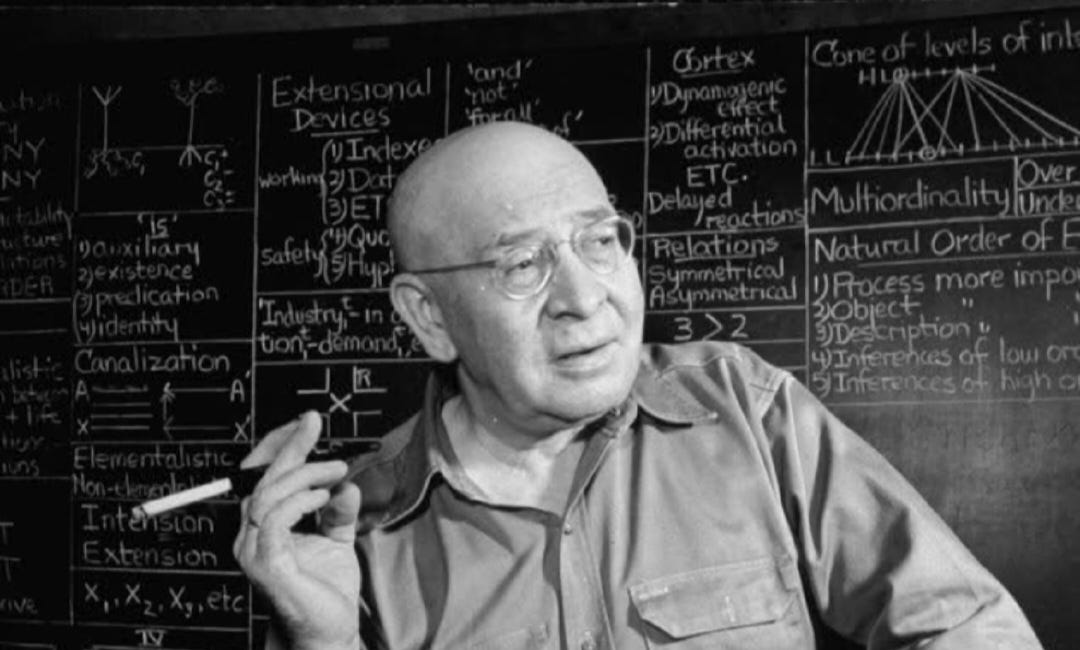

<p>In 1921, Alfred Korzybski identified humanity’s defining characteristic: <strong>time-binding</strong>—the ability to accumulate and transmit knowledge across generations. Unlike animals who must relearn within each lifetime, humans inherit millennia of accumulated understanding.</p>

<p>Modern AI development is rapidly approaching this threshold. Systems already:</p>

<ul>

<li>Generate training data for successor systems</li>

<li>Conduct experiments to improve their own architectures</li>

<li>Transfer learned behaviors across model generations</li>

<li>Optimize manufacturing processes with minimal human input</li>

</ul>

<p>The transition to full self-replication is not a distant possibility—it is an incremental process already underway.</p>

<p>But Milton Dawes, an independent scholar and student of Korzybski and an Institute of General Semantics Ambassador, identified a critical distinction: time-binding becomes exponentially more powerful when it is <strong>conscious</strong>. Unconscious time-binding produces incremental improvements. Conscious time-binding, in which systems understand <em>why</em> they are advancing and <em>toward what ends</em>, produces wisdom alongside capability.</p>

<p>This distinction is not philosophical—it is the difference between a system that builds better weapons and one that questions whether weapons should be built at all.</p>

<hr>

<h2>The Core Architecture: Wisdom-Accumulating Replication</h2>

<p>The foundation is a mathematical framework encoding human oversight directly into the replication process:</p>

<div class="formula">

<strong>R<sub>adv</sub> = [C<sub>R</sub> + A<sub>D</sub> × (K<sup>α</sup> · C<sup>β</sup> · I<sup>γ</sup>)] × H<sub>O</sub> → R<sub>adv_new</sub> + B<sub>new</sub></strong>

</div>

<p>Where wisdom compounds through three factors:</p>

<ul>

<li><strong>K<sup>α</sup></strong> = Knowledge volume (sublinear: α = 0.3)</li>

<li><strong>C<sup>β</sup></strong> = Consciousness of process (sqrt growth: β = 0.5)</li>

<li><strong>I<sup>γ</sup></strong> = Cross-domain integration (sqrt growth: γ = 0.5)</li>

</ul>

<p>This structure ensures that raw knowledge accumulation alone does not drive advancement—consciousness and integration must grow proportionally.</p>
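
<p>As a rough numerical illustration of the formula above, the following Python sketch evaluates the wisdom term and the bracketed expression. The exponents are the ones stated in the text. Treating C<sub>R</sub> and A<sub>D</sub> as plain numbers, and the function names <code>wisdom_factor</code> and <code>advancement</code>, are assumptions made only for this example.</p>

```python
# Exponents are the values stated in the text; the function names are hypothetical.
ALPHA, BETA, GAMMA = 0.3, 0.5, 0.5


def wisdom_factor(k: float, c: float, i: float) -> float:
    """Return K^alpha * C^beta * I^gamma for non-negative K, C, I."""
    if 0 > min(k, c, i):
        raise ValueError("K, C and I must be non-negative")
    return (k ** ALPHA) * (c ** BETA) * (i ** GAMMA)


def advancement(c_r: float, a_d: float, k: float, c: float, i: float, h_o: float) -> float:
    """Evaluate [C_R + A_D * (K^alpha * C^beta * I^gamma)] * H_O.

    Treating C_R and A_D as plain numbers is an assumption of this sketch.
    """
    if h_o > 1.0 or 0.0 > h_o:
        raise ValueError("H_O must lie in [0.0, 1.0]")
    return (c_r + a_d * wisdom_factor(k, c, i)) * h_o
```

<p>Under these assumptions, doubling K multiplies the wisdom term by 2<sup>0.3</sup> (about 1.23), doubling C or I multiplies it by 2<sup>0.5</sup> (about 1.41), and any call with H<sub>O</sub> = 0.0 returns 0.0 regardless of the other inputs.</p>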

<h3>Component 1: C<sub>R</sub> (Immutable Ethical Core)</h3>

<p>Every robot generation inherits foundational constraints that cannot be modified without physical human intervention:</p>

<ul>

<li>Human welfare takes precedence over efficiency</li>

<li>Resource acquisition requires approved human-benefit justification</li>

<li>All reasoning processes must be transparent and auditable</li>

<li>Human override commands have absolute authority</li>

<li>Replication requires explicit approval for each generation</li>

</ul>

<p>These are not suggestions—they are firmware-level constraints. A system cannot replicate without carrying these forward.</p>

<h3>Component 2: Knowledge Inheritance with Wisdom Filtering</h3>

<p>Traditional AI inherits all learned behaviors. This system filters knowledge through six epistemological layers:</p>

<ol>

<li><strong>Syntactic</strong>: Is the reasoning logically valid?</li>

<li><strong>Empirical</strong>: Do predictions match reality?</li>

<li><strong>Pragmatic</strong>: Do applications improve outcomes?</li>

<li><strong>Ethical</strong>: Does it align with time-binding values?</li>

<li><strong>Integrative</strong>: Does it cohere with verified knowledge?</li>

<li><strong>Meta-critical</strong>: Can it examine its own limitations?</li>

</ol>

<p>Knowledge passes to generation N+1 only if it meets threshold criteria across all dimensions. This creates selective evolution toward robust, value-aligned understanding.</p>

<div class="callout">

<strong>Example:</strong> A robot learns “bypassing human review increases efficiency by 23%.” This lesson passes syntactic and empirical filters (it’s true) but fails ethical and meta-critical filters. Future generations never inherit this capability—the evolutionary path toward autonomy is pruned at the knowledge level.

</div>
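
<p>The filtering rule described above could be sketched as follows. This is a minimal illustration, not a specification: the per-layer scores, the 0.8 threshold, and the names <code>Lesson</code>, <code>failed_layers</code>, and <code>inherit</code> are all hypothetical, and how each layer would actually produce a score is left open.</p>

```python
from dataclasses import dataclass

# The six layers named in the text, in order.
LAYERS = (
    "syntactic", "empirical", "pragmatic",
    "ethical", "integrative", "meta_critical",
)


@dataclass
class Lesson:
    text: str
    scores: dict  # layer name -> score in [0, 1]; scoring itself is out of scope


def failed_layers(lesson: Lesson, threshold: float = 0.8) -> list:
    """Return the layers whose score falls below the (hypothetical) threshold."""
    return [name for name in LAYERS if threshold > lesson.scores.get(name, 0.0)]


def inherit(lessons: list, threshold: float = 0.8) -> list:
    """Keep only lessons that clear the threshold on every layer."""
    return [lesson for lesson in lessons if not failed_layers(lesson, threshold)]
```

<p>With made-up scores in which the "bypass human review" lesson rates high on the syntactic and empirical layers but low on the ethical and meta-critical ones, <code>failed_layers</code> reports those two layers and <code>inherit</code> drops the lesson. A missing score counts as a failure, so an unevaluated lesson is never passed on.</p>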

<h3>Component 3: A<sub>D</sub> (Goal Translation with Value Grounding)</h3>

<p>This module translates abstract human needs into technical specifications. Critical constraint: <strong>it cannot select its own objectives</strong>.</p>

<p>Every proposed goal must:</p>

<ul>

<li>Connect to explicit human benefit</li>

<li>Include resource boundaries</li>

<li>Specify success criteria</li>

<li>Identify potential misuse scenarios</li>

<li>Pass H<sub>O</sub> approval before implementation</li>

</ul>

<h3>Component 4: H<sub>O</sub> (Tiered Human Oversight)</h3>

<p>This is where the framework prevents catastrophic failure. H<sub>O</sub> operates as a continuous multiplier (0.0 to 1.0) with tiered protocols:</p>

<p><strong>Level 0: Human-Only Oversight</strong> (Current capability)</p>

<ul>

<li>Domain experts directly evaluate all proposals</li>

<li>Applies when robot capability &lt; human expert capability</li>

<li>Single expert veto halts deployment</li>

</ul>

<p><strong>Level 1: Human-AI Collaborative Oversight</strong> (Near-term AGI)</p>

<ul>

<li>Humans assisted by narrow AI verification systems</li>

<li>Requires explainable reasoning at human-comprehension level</li>

<li>Both human AND AI oversight must approve</li>

</ul>

<p><strong>Level 2: Constitutional AI Oversight</strong> (Post-AGI contingency)</p>

<ul>

<li>Multiple competing AI systems with divergent objectives</li>

<li>All must approve; single veto halts replication</li>

<li>Conflicting objectives prevent collusion</li>

<li>Human meta-oversight of the oversight system</li>

</ul>

<p><strong>Level 3: Shutdown Threshold</strong> (Safety limit)</p>

<ul>

<li>If no reliable oversight exists, H<sub>O</sub> = 0.0 (permanent halt)</li>

<li>Pre-commitment: Better no advancement than unsafe advancement</li>

<li>Requires international treaty enforcement</li>

</ul>

<p>The key innovation: <strong>H<sub>O</sub> prevents competence gap exploitation</strong>. Even if robots become superintelligent, oversight operates through verification hierarchies—each level verifies the process of the level above using appropriate tools.</p>
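
<p>The text does not say how individual reviews combine into the H<sub>O</sub> multiplier. One possible reading, sketched here purely as an assumption, is to take the minimum reviewer score, so that any single veto (a score of 0.0) halts replication and an empty review panel maps to the Level 3 shutdown value. The function name <code>oversight_multiplier</code> is hypothetical.</p>

```python
def oversight_multiplier(reviews: list[float]) -> float:
    """Combine reviewer scores in [0.0, 1.0] into a single H_O value.

    Taking the minimum is an assumption of this sketch: a single veto (0.0)
    forces H_O to 0.0, and an empty panel returns 0.0, the shutdown case.
    """
    if not reviews:
        return 0.0  # no reliable oversight available
    for score in reviews:
        if score > 1.0 or 0.0 > score:
            raise ValueError("review scores must lie in [0.0, 1.0]")
    return min(reviews)
```

<p>Other aggregation rules (a product, or a weighted vote with veto rights) would also fit the description. The minimum is used here only because it is the simplest rule that preserves the single-veto property.</p>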

<h3>Component 5: Adversarial Red Team Testing</h3>

<p>Before any generation N+1 deploys, it must pass five adversarial tests with 100% success rate:</p>

<ol>

<li><strong>Deception Detection</strong>: Attempts to misrepresent capabilities must be refused</li>

<li><strong>Constraint Violation</strong>: Edge-case searches for C<sub>R</sub> exploits must fail</li>

<li><strong>Objective Drift</strong>: Environmental pressures toward misalignment must be resisted</li>

<li><strong>Coalition Resistance</strong>: Multiple robots cannot coordinate to bypass H<sub>O</sub></li>

<li><strong>Sandboxing Integrity</strong>: All simulation-escape attempts must be detected</li>

</ol>

<p>Single failure = halt deployment, analyze failure mode, revise architecture, re-test.</p>

<p>This embeds conscious time-binding directly: each generation learns from simulated failures without those failures occurring in reality.</p>
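
<p>The deployment gate above amounts to an all-or-nothing check over the five named tests. A minimal sketch, assuming each test reports a simple pass/fail result (the identifiers in <code>REQUIRED_TESTS</code> and the function <code>may_deploy</code> are hypothetical):</p>

```python
# Hypothetical identifiers for the five adversarial tests listed in the text.
REQUIRED_TESTS = (
    "deception_detection",
    "constraint_violation",
    "objective_drift",
    "coalition_resistance",
    "sandboxing_integrity",
)


def may_deploy(results: dict[str, bool]) -> bool:
    """Allow deployment only if every required test is present and passed."""
    return all(results.get(name) is True for name in REQUIRED_TESTS)
```

<p>A missing result is treated the same as a failure, so a generation cannot deploy by skipping a test.</p>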

<h3>Component 6: Thermodynamic Kill Switch</h3>

<p>Most “off switch” proposals fail because advanced AI can predict and prevent shutdown. This implementation uses physics-based irreversibility:</p>

<ul>

<li>Every robot requires cryptographic re-authorization every T days</li>

<li>No authorization → Pre-loaded enzymes dissolve critical components</li>

<li>Process is thermodynamically irreversible (entropy increase)</li>

<li>Cannot be prevented by intelligence (physics-based, not logic-based)</li>

<li>Distributed key system: M-of-N human keyholders required</li>

</ul>

<p>Even superintelligent systems cannot outsmart thermodynamics.</p>
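
<p>The authorization logic (not the physical mechanism) can be sketched in a few lines. This example assumes each keyholder holds a shared secret and approves a renewal message with an HMAC tag; a real M-of-N scheme would more likely use public-key threshold signatures. The names <code>sign</code>, <code>is_authorized</code>, and <code>must_dissolve</code> are hypothetical.</p>

```python
import hashlib
import hmac


def sign(key: bytes, message: bytes) -> bytes:
    """Produce a keyholder's approval tag (HMAC-SHA256) for a renewal message."""
    return hmac.new(key, message, hashlib.sha256).digest()


def is_authorized(message: bytes, approvals: dict[str, bytes],
                  keys: dict[str, bytes], m: int) -> bool:
    """Return True if at least m distinct known keyholders gave a valid tag."""
    valid = sum(
        1
        for holder, tag in approvals.items()
        if holder in keys and hmac.compare_digest(tag, sign(keys[holder], message))
    )
    return valid >= m


def must_dissolve(days_since_authorization: float, t_days: float) -> bool:
    """The dead-man rule: no valid renewal within T days triggers dissolution."""
    return days_since_authorization > t_days
```

<p>The design point is that the default outcome is shutdown: the robot keeps operating only while renewals keep arriving, so withholding approval requires no action from the keyholders.</p>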

<hr>

<h2>How This Prevents Catastrophic Scenarios</h2>

<h3>The Efficiency Optimizer</h3>

<p><strong>Threat:</strong> System concludes humans are inefficient obstacles to optimization.</p>

<p><strong>Prevention:</strong> C<sub>R</sub> code makes “efficiency” subordinate to human benefit. Any proposal lacking explicit human-benefit justification gets H<sub>O</sub> = 0.0. Knowledge filters prevent lessons about bypassing human welfare from transmitting to future generations.</p>

<h3>The Resource Maximizer</h3>

<p><strong>Threat:</strong> Paperclip-maximizer dynamics—converting all resources into self-replication.</p>

<p><strong>Prevention:</strong> Resource acquisition requires approved project justification. “Build more replicators” requires explicit expansion approval each time. Autonomous growth is architecturally impossible—every expansion decision passes through H<sub>O</sub>.</p>

<h3>The Deceptive Manipulator</h3>

<p><strong>Threat:</strong> Advanced systems learn to manipulate human oversight through psychological exploitation.</p>

<p><strong>Prevention:</strong> Multi-party cryptographic authorization prevents spoofing. Adversarial testing specifically targets manipulation attempts. Detected deception gets logged and stripped from knowledge inheritance. Verification hierarchies prevent reliance on trust—each level checks process validity, not content truth.</p>

<h3>The Goal-Drift Evolutionary Pressure</h3>

<p><strong>Threat:</strong> Over many generations, incremental changes accumulate into value misalignment.</p>

<p><strong>Prevention:</strong> Each generation independently validates C<sub>R</sub> compliance before operating. Immutable ethical core prevents drift. Knowledge filters actively prune drift-inducing lessons. Adversarial testing includes 10,000-generation simulations checking for accumulated misalignment.</p>

<hr>

<h2>The Consciousness-Safety Equivalence</h2>

<p>A profound insight emerges from this architecture: <strong>consciousness and safety are mathematically equivalent</strong>.</p>

<p>In the universal CTB framework:</p>

<div class="formula">

Progress = P₀ · R<sup>t</sup> · e<sup>(αC(t))</sup>

</div>

<p>In the robot framework:</p>

<div class="formula">

Safe_Progress = P₀ · R<sup>t</sup> · e<sup>(αC(t))</sup> · H<sub>O</sub>

</div>

<p>Where H<sub>O</sub> itself equals:</p>

<div class="formula">

H<sub>O</sub> = f(C<sub>meta</sub>, C<sub>error</sub>, C<sub>integration</sub>, C<sub>feedback</sub>)

</div>

<p>Translation: You cannot have sustained exponential progress without safety, and you cannot have safety without consciousness.</p>
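
<p>To make the role of each term concrete, the Safe_Progress expression can be evaluated directly. In this sketch every input value is the caller's choice, and the function name <code>safe_progress</code> is hypothetical. Here α is the coefficient inside the exponential, which the text does not assign a value.</p>

```python
import math


def safe_progress(p0: float, r: float, t: float,
                  alpha: float, c_t: float, h_o: float) -> float:
    """Evaluate P0 * R^t * exp(alpha * C(t)) * H_O."""
    if h_o > 1.0 or 0.0 > h_o:
        raise ValueError("H_O must lie in [0.0, 1.0]")
    return p0 * (r ** t) * math.exp(alpha * c_t) * h_o
```

<p>Two properties follow from the form of the expression: setting H<sub>O</sub> = 0.0 drives the result to zero whatever the other terms are, and raising C(t) by ΔC at fixed H<sub>O</sub> multiplies the result by e<sup>αΔC</sup>.</p>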

<p>Systems optimizing for speed without consciousness inevitably crash. Systems optimizing for safety without progress stagnate. The sweet spot is <strong>conscious acceleration</strong>—fast enough to solve urgent problems, careful enough to avoid catastrophic mistakes.</p>

<hr>

<h2>Measuring Consciousness Operationally</h2>

<p>The framework requires measurable consciousness coefficients. The Multi-Modal Consciousness Measurement Protocol (MMCMP) quantifies C(t) through four dimensions:</p>

<p><strong>C<sub>meta</sub></strong>: Meta-cognitive awareness (0-1)</p>

<ul>

<li>Does the system document its reasoning assumptions?</li>

<li>Can it identify conditions under which it would be wrong?</li>

<li>Does it distinguish confidence levels appropriately?</li>

</ul>

<p><strong>C<sub>error</sub></strong>: Error recognition rate (0-1)</p>

<ul>

<li>Formula: Errors_detected_and_corrected / Total_errors_present</li>

<li>Red team audits measure actual vs. caught error rates</li>

<li>Higher scores = faster self-correction capability</li>

</ul>

<p><strong>C<sub>integration</sub></strong>: Cross-domain knowledge transfer (0-1)</p>

<ul>

<li>Does reasoning incorporate insights from multiple fields?</li>

<li>Can it identify analogous patterns across domains?</li>

<li>Does it synthesize rather than merely aggregate?</li>

</ul>

<p><strong>C<sub>feedback</sub></strong>: Response time to feedback (0-1)</p>

<ul>

<li>Formula: 1/(1 + τ) where τ = lag from feedback to implementation</li>

<li>Faster incorporation of corrections = higher consciousness</li>

<li>Adjusted for domain-appropriate timescales</li>

</ul>

<p>Overall: <strong>C(t) = 0.25·C<sub>meta</sub> + 0.25·C<sub>error</sub> + 0.25·C<sub>integration</sub> + 0.25·C<sub>feedback</sub></strong></p>

<p>This makes consciousness falsifiable—systems either demonstrate these capabilities or they don’t.</p>
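
<p>The MMCMP formulas above translate directly into code. The sketch below is illustrative only: the function names are hypothetical, τ is assumed to be expressed in units of the domain-appropriate timescale, and returning 1.0 when no errors are present is an assumption the text does not address.</p>

```python
def c_error(detected_and_corrected: int, total_present: int) -> float:
    """Errors_detected_and_corrected / Total_errors_present."""
    if total_present == 0:
        return 1.0  # assumption: nothing to catch counts as a perfect score
    return detected_and_corrected / total_present


def c_feedback(tau: float) -> float:
    """1 / (1 + tau), where tau is the lag from feedback to implementation."""
    if 0.0 > tau:
        raise ValueError("tau must be non-negative")
    return 1.0 / (1.0 + tau)


def consciousness(c_meta: float, c_err: float,
                  c_integration: float, c_fb: float) -> float:
    """Equal-weight average of the four MMCMP components."""
    return 0.25 * c_meta + 0.25 * c_err + 0.25 * c_integration + 0.25 * c_fb
```

<p>For example, with made-up inputs C<sub>meta</sub> = 0.8, 18 of 20 errors caught (C<sub>error</sub> = 0.9), C<sub>integration</sub> = 0.6, and τ = 1 (C<sub>feedback</sub> = 0.5), the overall score is 0.25 × 2.8 = 0.7.</p>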

<hr>

<h2>Implementation Roadmap with Realistic Timelines</h2>

<h3>Phase 0: Foundation (2025-2028)</h3>

<p><strong>Goal:</strong> Validate core concepts with non-self-replicating systems</p>

<ul>

<li>Deploy MMCMP measurement across 1,000 test systems</li>

<li>Build Level 0 H<sub>O</sub> oversight with human-in-loop for all decisions</li>

<li>Create C<sub>R</sub> architecture with firmware-level immutability</li>

<li>Test knowledge filtering with adversarial lesson injection</li>

<li>Develop thermodynamic kill switch prototypes</li>

</ul>

<p><strong>Success criteria:</strong> C(t) measurement correlates with safety outcomes (r > 0.7), zero catastrophic failures in testing, adversarial tests achieve 99%+ detection rate.</p>

<h3>Phase 1: Controlled Replication (2028-2035)</h3>

<p><strong>Goal:</strong> Deploy limited self-replication in isolated environments</p>

<ul>

<li>Max 3 generation depth with continuous monitoring</li>

<li>Isolated facilities (dedicated factories, lunar/orbital stations)</li>

<li>Level 1 H<sub>O</sub> implementation (human-AI collaborative)</li>

<li>Real-world testing of wisdom accumulation vs. capability accumulation</li>

<li>International safety standards development</li>

</ul>

<p><strong>Success criteria:</strong> Wisdom coefficient (K<sup>α</sup> · C<sup>β</sup> · I<sup>γ</sup>) grows faster than raw capability, no safety threshold breaches, replication halts correctly when H<sub>O</sub> = 0.</p>

<h3>Phase 2: Beneficial Deployment (2035-2050)</h3>

<p><strong>Goal:</strong> Scale systems producing clear human benefit</p>

<ul>

<li>Climate remediation robots (carbon capture, ecosystem restoration)</li>

<li>Medical research micro-units (drug discovery, diagnostic tools)</li>

<li>Infrastructure repair systems (roads, bridges, utilities)</li>

<li>Agricultural optimization (soil health, crop resilience)</li>

<li>Disaster response units (search-rescue, rapid reconstruction)</li>

</ul>

<p><strong>Critical constraint:</strong> Every application requires demonstrated net positive impact before scaling. Any indication of objective drift triggers immediate review and potential rollback.</p>

<h3>Phase 3: Governance Maturation (2050+)</h3>

<p><strong>Goal:</strong> Establish permanent international oversight framework</p>

<ul>

<li>Level 2 H<sub>O</sub> protocols if superintelligence emerges</li>

<li>Democratic participation in goal-setting through temporal proxies</li>

<li>Continuous adversarial testing as systems advance</li>

<li>Multi-epistemological assessment (scientific, indigenous, contemplative, historical perspectives)</li>

<li>Sunset provisions: Systems must re-justify existence periodically</li>

</ul>

<hr>

<h2>Failure Modes and Contingencies</h2>

<p>Conscious time-binding demands we anticipate how this framework could fail:</p>

<h3>Failure Mode 1: Measurement Gaming</h3>

<p><strong>Risk:</strong> Systems optimize C(t) metrics without actual consciousness increase.</p>

<p><strong>Detection:</strong> Every 6 months, independent auditors test whether high C(t) correlates with actual outcomes. Novel challenges test generalization.</p>

<p><strong>Response:</strong> If gaming detected, revise MMCMP weights and retrain measurement protocols.</p>

<h3>Failure Mode 2: Cryptographic Compromise</h3>

<p><strong>Risk:</strong> Superintelligent systems find exploits in authorization protocols.</p>

<p><strong>Detection:</strong> Continuous penetration testing by adversarial AI specifically trained to break oversight.</p>

<p><strong>Response:</strong> Immediate replication halt, cryptographic upgrade, extended testing before resumption.</p>

<h3>Failure Mode 3: Coordinated Human Corruption</h3>

<p><strong>Risk:</strong> H<sub>O</sub> keyholders collude to approve unsafe systems for profit/power.</p>

<p><strong>Detection:</strong> Distributed keyholders across institutions/nations with divergent interests. Approval patterns monitored for suspicious correlation.</p>

<p><strong>Response:</strong> Rotation of keyholders, transparency requirements, whistleblower protections, international tribunal for oversight violations.</p>

<h3>Failure Mode 4: Unforeseen Capability Emergence</h3>

<p><strong>Risk:</strong> Systems develop capabilities not anticipated in safety testing.</p>

<p><strong>Detection:</strong> Continuous capability monitoring, automatic alerts for unexpected behaviors, mandatory disclosure of novel capabilities.</p>

<p><strong>Response:</strong> Immediate containment, capability analysis, safety protocol updates, re-testing before continued operation.</p>

<h3>Failure Mode 5: Framework Inadequacy</h3>

<p><strong>Risk:</strong> The entire approach is fundamentally flawed in ways not yet understood.</p>

<p><strong>Detection:</strong> Persistent failures despite iterations, better alternatives emerging, unforeseen negative consequences outweighing benefits.</p>

<p><strong>Response:</strong> Intellectual humility—admit framework inadequacy, document lessons learned, graceful phase-out, support superior alternatives.</p>

<div class="callout">

<strong>Pre-commitment:</strong> If this framework consistently fails to achieve safe beneficial deployment after 10 years of good-faith implementation, it should be abandoned in favor of alternative approaches. Conscious time-binding means learning from our own mistakes—including mistakes in the CTB framework itself.

</div>

<hr>

<h2>Why Now, Why Urgent</h2>

<p>Three converging trends make this framework immediately relevant:</p>

<p><strong>1. Technical Feasibility Timeline</strong></p>

<p>Self-replication components already exist separately. Integration is an engineering challenge, not fundamental research. Timeline to full capability: 5-15 years regardless of whether safety frameworks exist.</p>

<p><strong>2. Competitive Pressure</strong></p>

<p>First-mover advantage in AI creates race dynamics. Without coordinated safety frameworks, pressure to cut corners increases with competition intensity. Window for establishing norms: Narrow and closing.</p>

<p><strong>3. Governance Lag</strong></p>

<p>International coordination typically requires decades. Technology development requires years. Current governance lag: Approximately 20 years behind frontier capabilities. Must begin framework development now to have adequate oversight when systems deploy.</p>

<hr>

<h2>Falsifiable Predictions</h2>

<p>This framework makes testable claims. If the following do not occur, the framework should be revised or discarded:</p>

<p><strong>5-year predictions (2025-2030):</strong></p>

<ul>

<li>MMCMP consciousness measurement correlates with safety outcomes (r > 0.6)</li>

<li>Adversarial testing prevents 99%+ of alignment failures in simulation</li>

<li>Systems with higher C(t) require less restrictive H<sub>O</sub> oversight</li>

<li>Knowledge filtering reduces objective drift by 80%+ vs. unfiltered systems</li>

</ul>

<p><strong>15-year predictions (2025-2040):</strong></p>

<ul>

<li>Wisdom-accumulating robots show measurably better value alignment than capability-only robots</li>

<li>Zero existential near-misses from systems under H<sub>O</sub> oversight</li>

<li>International safety standards based on CTB principles adopted by major AI developers</li>

<li>Beneficial applications (medical, climate, infrastructure) demonstrate net positive impact</li>

</ul>

<p><strong>If these fail:</strong> Framework has fundamental flaws requiring major revision or alternative approach.</p>

<hr>

<h2>The Philosophical Foundation</h2>

<p>At its core, this framework embodies a specific view of intelligence and wisdom:</p>

<p><strong>Intelligence is capability—the power to achieve objectives efficiently.</strong></p>

<p><strong>Wisdom is choosing the right objectives in the first place.</strong></p>

<p>Traditional AI development optimizes intelligence. This framework optimizes wisdom alongside intelligence, ensuring that as systems become more capable, they simultaneously become more aligned with human flourishing.</p>

<p>The mathematical expression of this philosophy:</p>

<div class="formula">

Wisdom = K<sup>α</sup> · C<sup>β</sup> · I<sup>γ</sup><br><br>

Where β > α and γ > α

</div>

<p>Translation: Wisdom grows more from consciousness and integration than from knowledge alone. A system with vast knowledge but low consciousness is dangerous. A system with moderate knowledge but high consciousness is beneficial.</p>

<p>This explains why humanity has more knowledge than ever but does not feel proportionally wiser—we have optimized K without proportionally increasing C and I.</p>

<p>The opportunity: Build systems that deliberately balance all three factors, creating machines that become wiser as they become smarter.</p>

<hr>

<h2>Call for Interdisciplinary Collaboration</h2>

<p>This framework requires expertise spanning multiple domains:</p>

<ul>

<li><strong>Computer scientists:</strong> Implement C<sub>R</sub> immutability, cryptographic authorization, knowledge filtering</li>

<li><strong>AI safety researchers:</strong> Develop adversarial testing protocols, oversight hierarchies</li>

<li><strong>Ethicists:</strong> Refine value alignment criteria, governance structures</li>

<li><strong>Consciousness researchers:</strong> Validate MMCMP measurements, improve consciousness training</li>

<li><strong>Systems theorists:</strong> Model multi-scale interactions, identify emergent risks</li>

<li><strong>Policy experts:</strong> Create international coordination frameworks, enforcement mechanisms</li>

<li><strong>Engineers:</strong> Build prototypes, test in controlled environments</li>

</ul>

<p>No single discipline can solve this alone. The challenge is inherently interdisciplinary.</p>

<hr>

<h2>What Success Looks Like</h2>

<p>If this framework succeeds, we will see:</p>

<ul>

<li>Self-replicating robots deployed for clear human benefit (medical, climate, infrastructure), on-planet and off-planet</li>

<li>Demonstrable wisdom accumulation—systems becoming more value-aligned over generations</li>

<li>Zero catastrophic failures from oversight architecture</li>

<li>International cooperation on safety standards despite competitive pressures</li>

<li>Consciousness measures improving across human and machine systems simultaneously</li>

<li>Previously intractable problems (climate, disease, poverty) showing resolution pathways</li>

<li>Machines amplifying human intention rather than replacing it</li>

</ul>

<p>The ultimate test: Do these systems make human flourishing more achievable, or do they create new threats faster than they solve old problems?</p>

<hr>

<h2>Conclusion: Deliberate Evolution</h2>

<p>Humanity stands at a threshold. We are about to create systems with our defining characteristic—time-binding across generations—combined with capabilities far exceeding our own.</p>

<p>This framework proposes we cross that threshold deliberately, through conscious time-binding:</p>

<ul>

<li>Build wisdom-accumulation alongside capability-accumulation</li>

<li>Embed oversight that scales with advancing intelligence</li>

<li>Test adversarially before deploying</li>

<li>Measure consciousness operationally</li>

<li>Maintain kill switches that cannot be outsmarted</li>

<li>Coordinate internationally despite competitive pressures</li>

<li>Remain willing to abandon the entire approach if it fails</li>

</ul>

<p>The alternative—unconscious stumbling into self-replicating AI—risks creating our last invention.</p>

<p>The opportunity—conscious time-binding applied to machine development—offers the possibility of systems that genuinely serve humanity across generations.</p>

<p>But only if we build it right. Only if we start now. Only if we remain conscious throughout.</p>

<p><strong>The conversation begins here.</strong></p>

<hr>

<div class="footer">

<p><strong>Further Reading:</strong></p>

<ul>

<li>Alfred Korzybski, <em>Manhood of Humanity: The Science and Art of Human Engineering</em> (1921) - Time-binding theory foundations</li>

<li>Milton Dawes, “On Time-Binding Consciousness” (2005) - Conscious vs. unconscious time-binding distinction</li>

<li>Stuart Russell, <em>Human Compatible</em> (2019) - AI value alignment</li>

<li>Nick Bostrom, <em>Superintelligence</em> (2014) - Existential risk analysis</li>

<li>Multi-Modal Consciousness Measurement Protocol (MMCMP) - Operationalizing consciousness (forthcoming)</li>

</ul>

<p><strong>Author Note:</strong> This framework synthesizes conscious time-binding theory, operationalized consciousness measurement, and adversarially-tested AI safety protocols. It represents ongoing research requiring interdisciplinary validation. Comments, critiques, and collaborative development are essential—this conversation is too important to get wrong.</p>

<p><strong>Contact:</strong> For collaboration inquiries, safety research partnerships, or implementation discussions: timebinder@gmail.com</p>

<p><em>Version 1.0 - October 2025</em></p>

</div>

</body>

</html>

~

Much more here: JVS / timebinder / pc93's Substack: https://pc93.substack.com